Deploy ZooKeeper (three nodes)

```yaml
apiVersion: v1
```
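In a three-node ensemble every ZooKeeper server must know the addresses of all its peers and its own id. The manifest above is truncated, so the sketch below does not reproduce it. It assumes a StatefulSet named `zk` behind a headless Service `zk-hs` in the `kafka` namespace (all three names are hypothetical). It builds the space-separated `server.N=host:2888:3888;2181` list that the official `zookeeper` image reads from `ZOO_SERVERS`, plus a `myid` derived from the pod ordinal.

```shell
# Sketch: derive ZooKeeper ensemble settings for a 3-replica StatefulSet.
# Hypothetical names: StatefulSet "zk", headless Service "zk-hs",
# namespace "kafka". Pods are therefore zk-0, zk-1, zk-2.

# zk_servers REPLICAS STS SVC NS
# Prints "server.1=zk-0.zk-hs.kafka.svc.cluster.local:2888:3888;2181 server.2=..."
zk_servers() {
  replicas=$1 sts=$2 svc=$3 ns=$4
  out=""
  i=0
  while [ "$i" -lt "$replicas" ]; do
    # Stable per-pod DNS name provided by the headless Service.
    host="$sts-$i.$svc.$ns.svc.cluster.local"
    # ZooKeeper ids start at 1, StatefulSet ordinals start at 0.
    entry="server.$((i + 1))=$host:2888:3888;2181"
    out="${out:+$out }$entry"
    i=$((i + 1))
  done
  printf '%s\n' "$out"
}

# zk_myid HOSTNAME -> pod ordinal + 1 (e.g. zk-2 -> 3)
zk_myid() {
  ordinal=${1##*-}
  printf '%s\n' "$((ordinal + 1))"
}
```

A StatefulSet pod's hostname equals its pod name. Inside the container, `zk_myid "$HOSTNAME"` therefore yields a value suitable for `ZOO_MY_ID`, and `zk_servers 3 zk zk-hs kafka` yields the value for `ZOO_SERVERS`.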

Deploy Kafka (three nodes)

```yaml
apiVersion: v1
```
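Each Kafka broker needs a unique `broker.id` and a `zookeeper.connect` string that lists the ensemble. One common pattern in a StatefulSet reuses the pod ordinal as the broker id. That pattern is an assumption here, not taken from the truncated manifest above. The sketch below uses hypothetical names: Kafka pods `kafka-0..2` behind headless Service `kafka-hs`, ZooKeeper pods `zk-0..2` behind `zk-hs`, all in namespace `kafka`.

```shell
# Sketch: per-broker settings for a 3-replica Kafka StatefulSet.
# Hypothetical names: Kafka pods kafka-0..2 behind headless Service "kafka-hs";
# ZooKeeper pods zk-0..2 behind headless Service "zk-hs"; namespace "kafka".

# kafka_broker_id HOSTNAME -> StatefulSet ordinal (kafka-1 -> 1)
kafka_broker_id() {
  printf '%s\n' "${1##*-}"
}

# zk_connect REPLICAS STS SVC NS -> comma-separated host:2181 list
zk_connect() {
  replicas=$1 sts=$2 svc=$3 ns=$4
  out=""
  i=0
  while [ "$i" -lt "$replicas" ]; do
    out="${out:+$out,}$sts-$i.$svc.$ns.svc.cluster.local:2181"
    i=$((i + 1))
  done
  printf '%s\n' "$out"
}

# render_broker_props HOSTNAME -> minimal server.properties fragment
# (the zk/kafka-hs/kafka names below are the hypothetical ones above).
render_broker_props() {
  host=$1
  printf 'broker.id=%s\n' "$(kafka_broker_id "$host")"
  printf 'zookeeper.connect=%s\n' "$(zk_connect 3 zk zk-hs kafka)"
  printf 'advertised.listeners=PLAINTEXT://%s.kafka-hs.kafka.svc.cluster.local:9092\n' "$host"
}
```

Advertising the per-pod DNS name keeps each broker reachable at a stable address inside the cluster across restarts, which is why the headless Service is used rather than a load-balanced one.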

Check that all pods have started without errors:

```shell
[root@k8s-h3c-master01 kafka]# kubectl get pod -n kafka
```

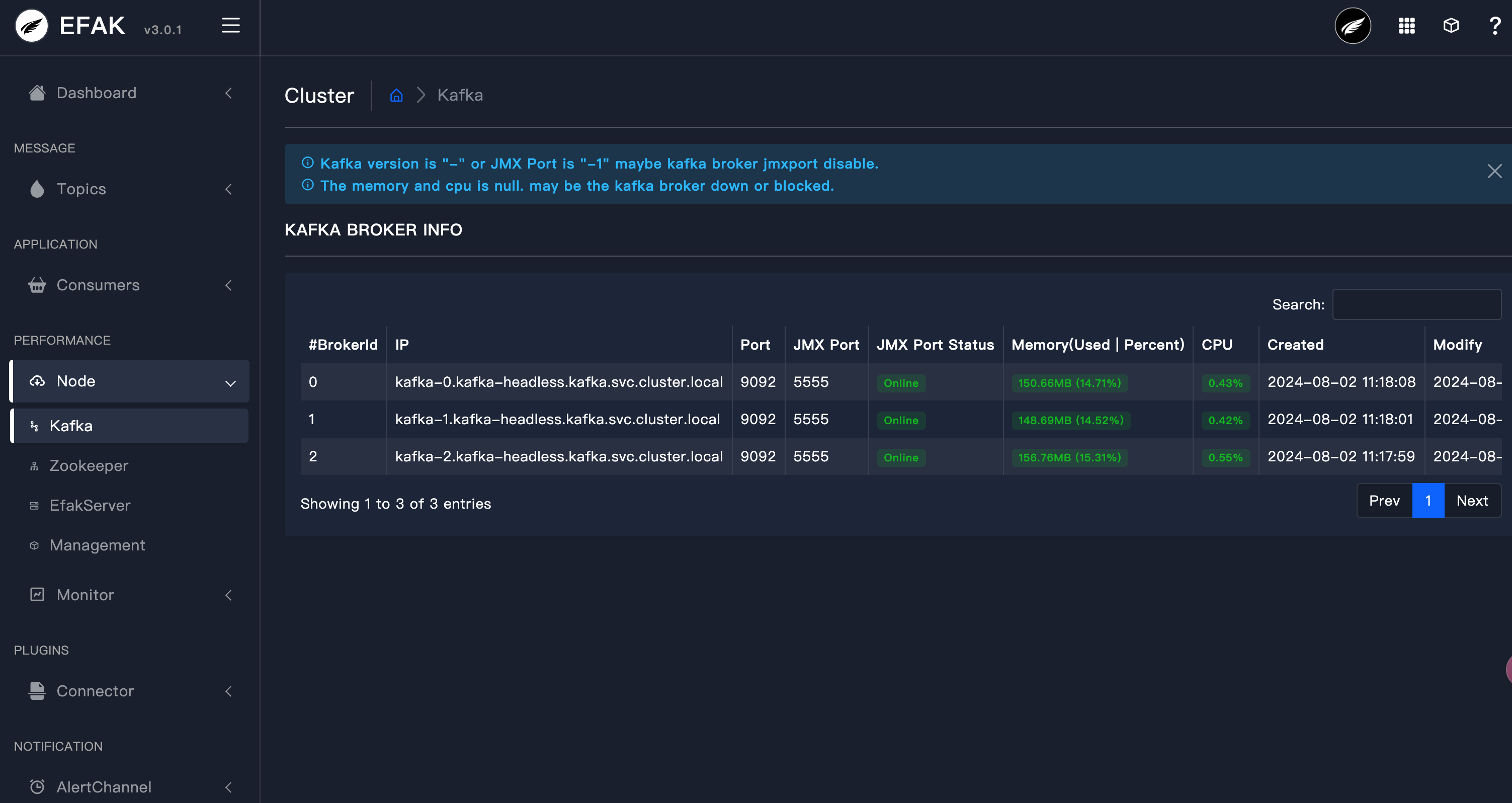

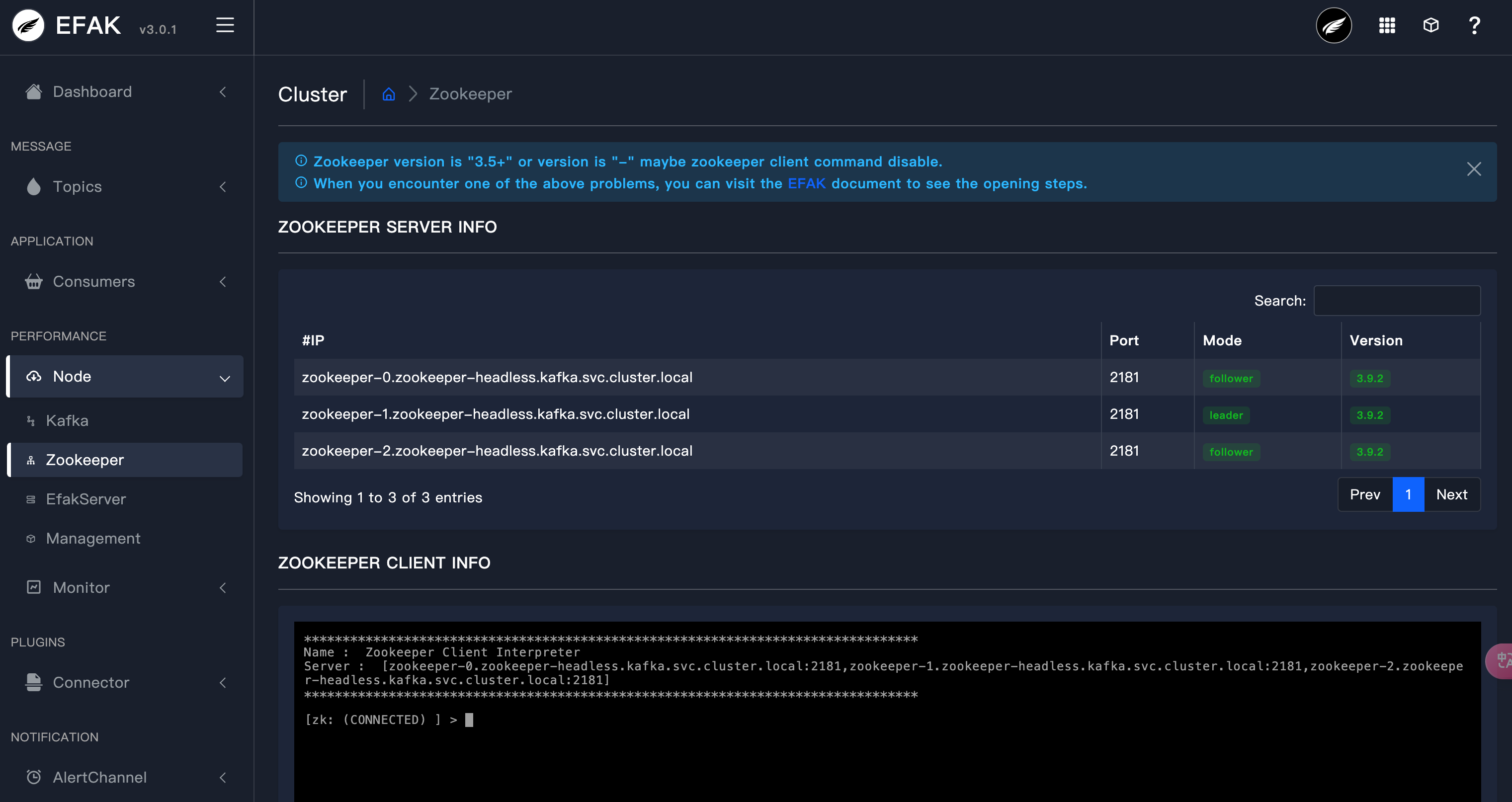

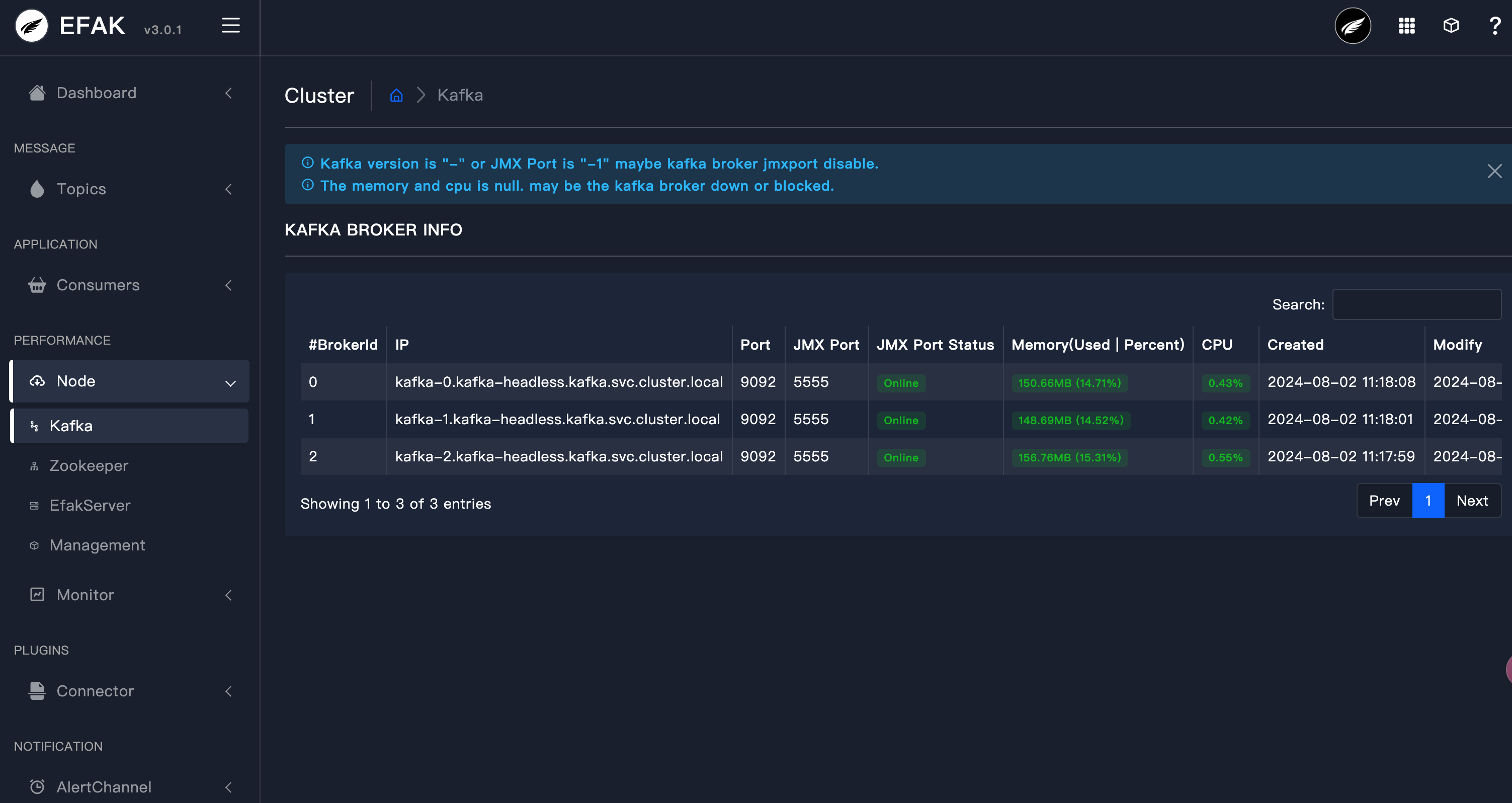

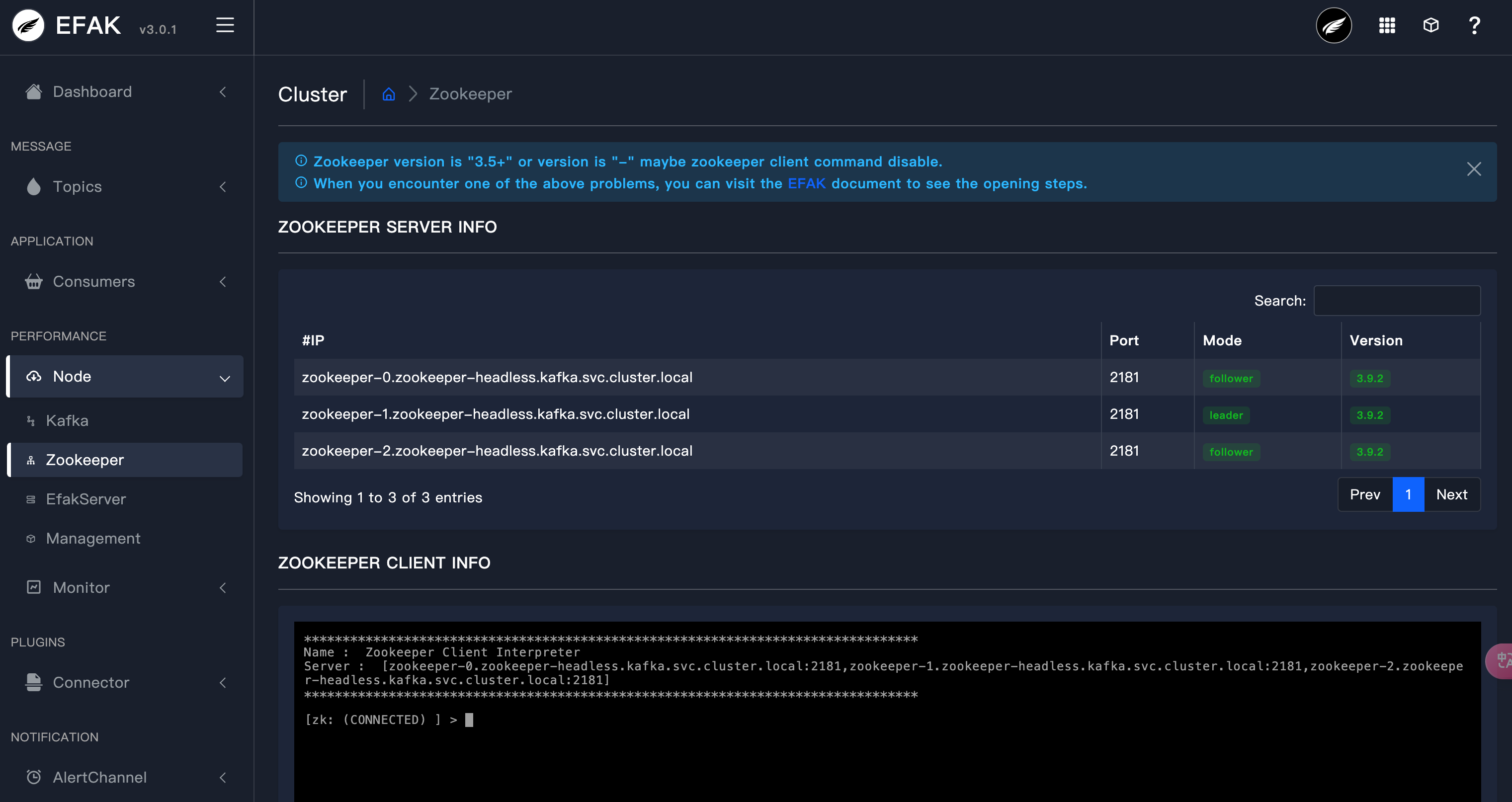
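Reading the output by eye works for a handful of pods. The sketch below turns the same check into a script. It reads the default table printed by `kubectl get pod -n kafka` (columns NAME, READY, STATUS, RESTARTS, AGE) and reports any pod whose STATUS is not `Running` or whose READY count is incomplete, such as `0/1`. The function name `check_pods` is hypothetical.

```shell
# Sketch: flag pods that are not fully up in `kubectl get pod` output.
# Reads the default table from stdin, skips the header line, and prints
# one line per problem pod. Returns 1 if any pod is not Running/ready.
check_pods() {
  awk '
    NR == 1 { next }                      # skip header row
    {
      split($2, r, "/")                   # READY column, e.g. 1/1
      if ($3 != "Running" || r[1] != r[2]) {
        print "NOT READY: " $1 " (READY=" $2 ", STATUS=" $3 ")"
        bad = 1
      }
    }
    END { exit bad }                      # unset bad -> exit 0
  '
}

# Usage against a live cluster:
#   kubectl get pod -n kafka | check_pods && echo "all pods running"
```

Only the first three columns are read, so the RESTARTS column's extra text in newer kubectl versions (for example `2 (5m ago)`) does not affect the result.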

Deploy Kafka-eagle for visualization

```yaml
apiVersion: apps/v1
```

Verification

Log in to Eagle at http://ip:30048, replacing ip with the IP address of a cluster node.

Username: admin, password: 123456
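Before logging in, it can help to confirm which NodePort the Eagle Service actually exposes and that the UI answers. The sketch below extracts NodePorts from the PORT(S) column of `kubectl get svc -n kafka`, where entries look like `port:nodePort/TCP`, and probes a URL with `curl`. The Service name `kafka-eagle` and the `NODE_IP` value are placeholders, not names taken from the manifest above.

```shell
# Sketch: find a Service's NodePort(s) and probe the Eagle web UI.
# Reads default `kubectl get svc` output on stdin
# (NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE).

# node_ports SERVICE_NAME -> each NodePort of that Service, one per line
node_ports() {
  awk -v svc="$1" '
    NR > 1 && $1 == svc {
      n = split($5, ports, ",")           # PORT(S): port:nodePort/TCP[,...]
      for (i = 1; i <= n; i++) {
        # Only port:nodePort/proto entries have three parts.
        if (split(ports[i], p, "[:/]") == 3) print p[2]
      }
    }
  '
}

# probe_ui URL -> HTTP status code; curl prints 000 if it cannot connect
probe_ui() {
  curl -s -o /dev/null -w '%{http_code}\n' "$1"
}

# Usage against a live cluster (NODE_IP and the Service name are placeholders):
#   port=$(kubectl get svc -n kafka | node_ports kafka-eagle)
#   probe_ui "http://NODE_IP:$port"
```

Any real HTTP status code, rather than `000`, indicates that the NodePort is reachable from the machine running the check.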
