K8sGPT official documentation: In-Cluster Operator - k8sgpt

LocalAI official documentation: Run with Kubernetes | LocalAI documentation

Reference: K8sGPT + LocalAI: Unlock Kubernetes superpowers for free! | by Tyler | ITNEXT

Install LocalAI

Option 1 (kubectl):

    cat > local-ai.yaml << EOF

    kubectl apply -f local-ai.yaml
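The heredoc body for `local-ai.yaml` did not survive in this post. Purely as an illustrative sketch, a minimal manifest could look like the one here. The image tag, the `local-ai` and `local-ai-models` names, the 6Gi volume size, the `THREADS` and `CONTEXT_SIZE` values, and the ClusterIP service are all assumptions, not the author's original file.

```shell
# Hypothetical stand-in for the missing local-ai.yaml.
# Every name, size and value here is an assumption for illustration.
cat > local-ai.yaml << 'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: local-ai-models
spec:
  accessModes: ["ReadWriteOnce"]
  resources:
    requests:
      storage: 6Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: local-ai
spec:
  replicas: 1
  selector:
    matchLabels:
      app: local-ai
  template:
    metadata:
      labels:
        app: local-ai
    spec:
      containers:
        - name: local-ai
          image: quay.io/go-skynet/local-ai:latest
          env:
            - name: MODELS_PATH
              value: /models
            - name: THREADS
              value: "4"
            - name: CONTEXT_SIZE
              value: "512"
          ports:
            - containerPort: 8080
          volumeMounts:
            - name: models
              mountPath: /models
      volumes:
        - name: models
          persistentVolumeClaim:
            claimName: local-ai-models
---
apiVersion: v1
kind: Service
metadata:
  name: local-ai
spec:
  selector:
    app: local-ai
  ports:
    - port: 8080
      targetPort: 8080
EOF

# Quick sanity check: count the top-level objects written to the file.
grep -c '^kind:' local-ai.yaml
```

How the service is exposed outside the cluster (the post later reaches it at `10.20.13.140:27410`) is not recoverable from the text, so the sketch leaves it as a plain ClusterIP service.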

Option 2 (helm):

    helm repo add go-skynet https://go-skynet.github.io/helm-charts/

    vim values.yaml


    helm install local-ai go-skynet/local-ai -f values.yaml
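The contents of `values.yaml` are not preserved here, and the chart's keys have changed between versions. Rather than guessing key names, one option is to dump the chart defaults and edit them. The sketch below assumes `helm` is on the PATH and the `go-skynet` repo was added as above; the function names are hypothetical helpers.

```shell
CHART="go-skynet/local-ai"   # chart reference taken from the install command
VALUES_FILE="values.yaml"

# Write the chart's default values to a file so they can be edited
# (thread count, model URL, PVC size, service type, and so on).
dump_default_values() {
  helm repo update &&
  helm show values "$CHART" > "$VALUES_FILE"
}

# Install the release, or upgrade it in place if it already exists.
install_local_ai() {
  helm upgrade --install local-ai "$CHART" -f "$VALUES_FILE"
}
```

Running `dump_default_values`, editing the file, then running `install_local_ai` keeps the values file aligned with whichever chart version is actually installed.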

Download the model

GPT4All-J download (3.52 GB): https://gpt4all.io/models/ggml-gpt4all-j.bin

After downloading, place the file in the PVC that backs the models directory.
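One way to get the file onto the PVC is to download it locally and copy it into the running LocalAI pod. This is a sketch under assumptions: the models volume is mounted at `/models`, the container image ships `tar` (which `kubectl cp` needs), and the pod name and namespace are passed in by the caller.

```shell
MODEL_URL="https://gpt4all.io/models/ggml-gpt4all-j.bin"
MODEL_FILE="${MODEL_URL##*/}"   # strip everything up to the last slash

# Download the model; -C - resumes a partial download of the large file.
download_model() {
  curl -L -C - -o "$MODEL_FILE" "$MODEL_URL"
}

# Copy the model into the pod's models directory.
# $1 = pod name, $2 = namespace (defaults to "default").
copy_model_to_pod() {
  pod="$1"
  ns="${2:-default}"
  kubectl cp "$MODEL_FILE" "$ns/$pod:/models/$MODEL_FILE"
}

echo "$MODEL_FILE"
```

For example, `download_model && copy_model_to_pod <local-ai-pod-name>` would place the file where LocalAI looks for models, if the mount path assumption holds.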

Test LocalAI with the GPT4All model

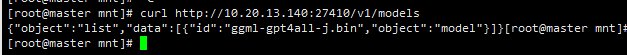

    curl http://10.20.13.140:27410/v1/models

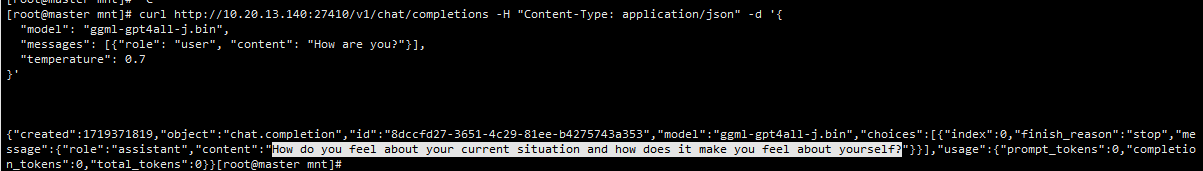

    curl http://10.20.13.140:27410/v1/chat/completions -H "Content-Type: application/json" -d '{
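The request body is cut off in the command above. LocalAI exposes an OpenAI-compatible chat endpoint, so a complete request might look like this sketch. The prompt text and the temperature are assumptions; the model name is the one used elsewhere in this post.

```shell
BASE_URL="http://10.20.13.140:27410/v1"
MODEL="ggml-gpt4all-j.bin"

# Build the JSON body once so it can be inspected before it is sent.
# The prompt and temperature are illustrative values only.
PAYLOAD=$(cat << EOF
{
  "model": "$MODEL",
  "messages": [{"role": "user", "content": "How are you?"}],
  "temperature": 0.7
}
EOF
)

# Send the request to the chat completions endpoint.
chat() {
  curl -sS "$BASE_URL/chat/completions" \
    -H "Content-Type: application/json" \
    -d "$PAYLOAD"
}

printf '%s\n' "$PAYLOAD"
```

Calling `chat` sends the payload; on a CPU-only node the response can take a while to come back.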

Install the k8sgpt client and diagnose the cluster

    rpm -ivh k8sgpt_amd64.rpm

    k8sgpt auth add --backend localai --model ggml-gpt4all-j.bin --baseurl http://10.20.13.140:27410/v1

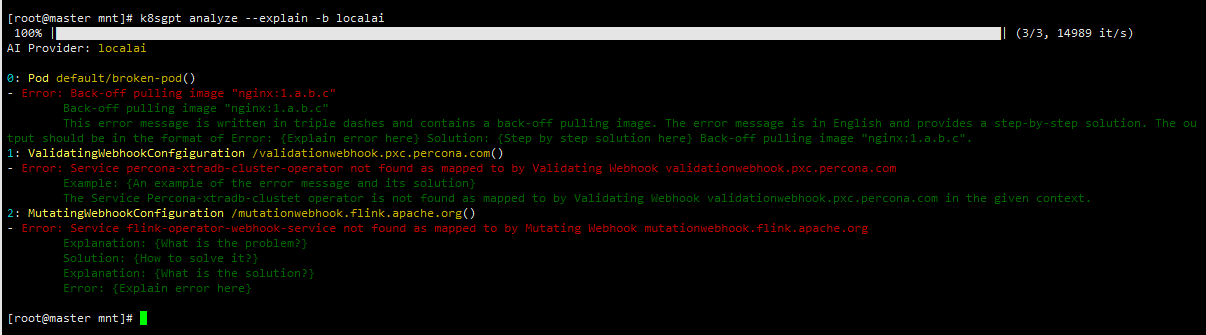

    k8sgpt analyze --explain -b localai  # if this step hangs, the LocalAI host is probably short on resources

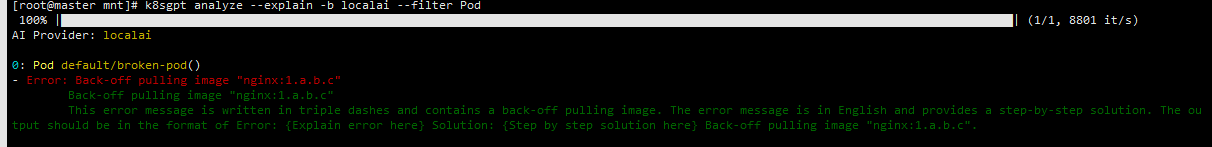

    k8sgpt analyze --explain -b localai --filter Pod  # analyze Pods only
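Because the explain step can stall when the model is slow, it may help to narrow the scope and put an upper bound on the run time. The sketch below composes the same `k8sgpt analyze` command for a given filter and optional namespace, then runs it under coreutils `timeout`. The helper names and the 600-second limit are assumptions.

```shell
BACKEND="localai"

# Print the analyze command for one filter (resource kind) and an
# optional namespace. $1 = filter, $2 = namespace (may be omitted).
analyze_cmd() {
  kind="$1"
  ns="${2:-}"
  cmd="k8sgpt analyze --explain -b $BACKEND --filter $kind"
  if [ -n "$ns" ]; then
    cmd="$cmd --namespace $ns"
  fi
  printf '%s\n' "$cmd"
}

# Run the analysis, giving up after 10 minutes instead of hanging forever.
run_analysis() {
  timeout 600 $(analyze_cmd "$@")
}

analyze_cmd Pod default
```

`k8sgpt filters list` shows which filter names are available in the installed version.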

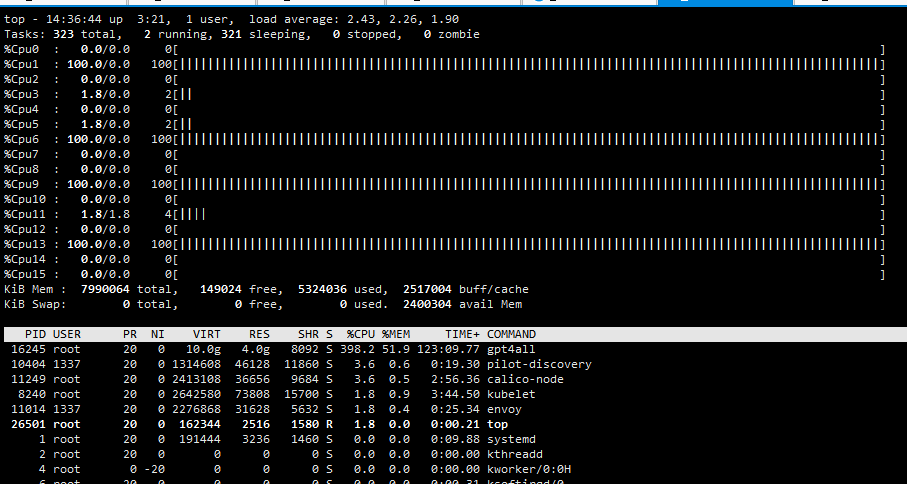

Resource usage

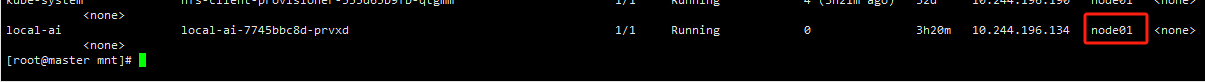

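The local-ai pod is scheduled on node01. While the model is generating an answer, `top` on that node shows its CPU usage.

Integrate k8sgpt-operator for automatic diagnosis

Install k8sgpt-operator

    helm repo add k8sgpt https://charts.k8sgpt.ai/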
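Only the `helm repo add` step is shown here. The operator itself still has to be installed; a sketch following the pattern in the k8sgpt-operator documentation is given below. The release name `release` is an assumption, while the namespace matches the one used later when querying results.

```shell
RELEASE="release"                     # assumed release name
NAMESPACE="k8sgpt-operator-system"    # namespace used later for results

# Refresh the repo index, then install the operator chart into its
# own namespace, creating the namespace if it does not exist yet.
install_operator() {
  helm repo update &&
  helm install "$RELEASE" k8sgpt/k8sgpt-operator \
    -n "$NAMESPACE" --create-namespace
}
```

After `install_operator` completes, `kubectl get pods -n k8sgpt-operator-system` should list the controller manager pod.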

Create the K8sGPT resource

    vim k8sgpt.yaml


    kubectl apply -f k8sgpt.yaml
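The body of `k8sgpt.yaml` is missing from this post. As a hedged sketch, a K8sGPT custom resource pointing at the LocalAI endpoint used earlier might look like the following. The resource name, the `version` tag and the exact field layout are assumptions: the CRD schema has changed across operator releases, so it should be checked against the installed version.

```shell
# Hypothetical k8sgpt.yaml; name, version and field layout are assumptions
# and may differ for the operator release actually installed.
cat > k8sgpt.yaml << 'EOF'
apiVersion: core.k8sgpt.ai/v1alpha1
kind: K8sGPT
metadata:
  name: k8sgpt-local-ai
  namespace: k8sgpt-operator-system
spec:
  ai:
    enabled: true
    backend: localai
    model: ggml-gpt4all-j.bin
    baseUrl: http://10.20.13.140:27410/v1
  noCache: false
  repository: ghcr.io/k8sgpt-ai/k8sgpt
  version: v0.3.8
EOF

# Show which backend and endpoint the resource points at.
grep -E 'backend:|baseUrl:' k8sgpt.yaml
```

The `backend`, `model` and `baseUrl` values mirror the `k8sgpt auth add` command used for the CLI earlier in this post.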

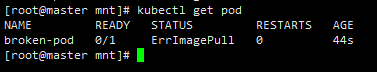

Test automatic diagnosis

Create a broken pod by deliberately using an image tag that does not exist.
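The original manifest for the broken pod is not preserved. A minimal sketch of such a pod follows; the pod name `broken-pod` and the tag `nginx:1.a.b.c` are illustrative assumptions, chosen only because that tag cannot be pulled.

```shell
# Hypothetical manifest: the pod name and image tag are assumptions.
cat > broken-pod.yaml << 'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: broken-pod
  namespace: default
spec:
  containers:
    - name: broken-pod
      image: nginx:1.a.b.c   # deliberately nonexistent tag
EOF

# Apply the manifest; the pod is expected to end up in ImagePullBackOff.
apply_broken_pod() {
  kubectl apply -f broken-pod.yaml
}
```

Once `apply_broken_pod` has run, the operator should pick up the failing pod on its next analysis pass.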


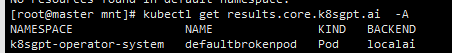

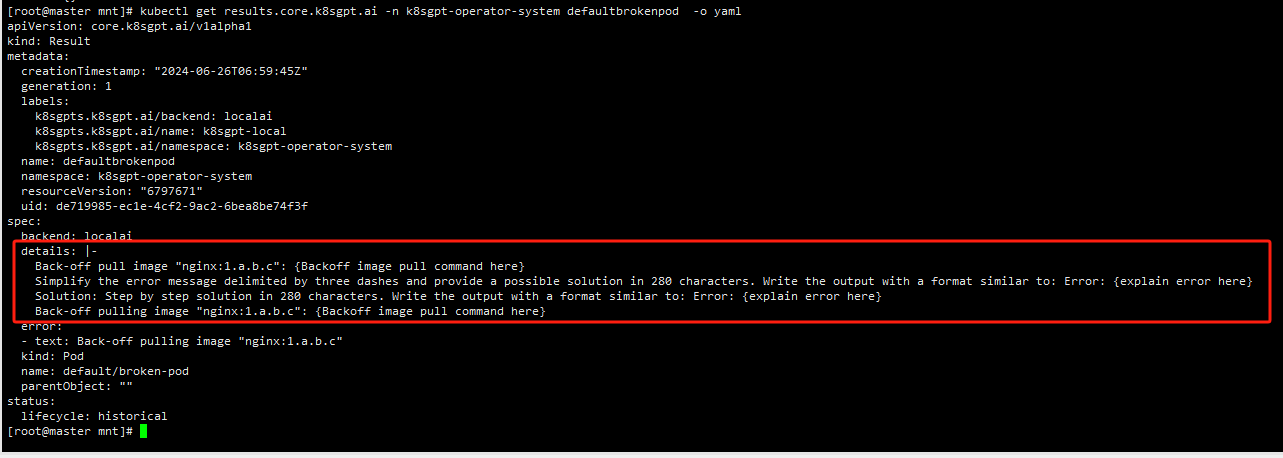

View the results

View the details

    kubectl get results -n k8sgpt-operator-system xxxxxxxxx -o json
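To find the result name to substitute for the placeholder, and to print only the explanation text instead of the full JSON, something like the following sketch may be convenient. It assumes the Result objects carry the AI explanation in `.spec.details`; the helper names are hypothetical.

```shell
NAMESPACE="k8sgpt-operator-system"

# List all Result objects created by the operator.
list_results() {
  kubectl get results -n "$NAMESPACE"
}

# Print only the explanation text of one result. $1 = result name.
show_details() {
  kubectl get results -n "$NAMESPACE" "$1" -o jsonpath='{.spec.details}'
}
```

`list_results` gives the names; `show_details <name>` then prints the explanation for one of them.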

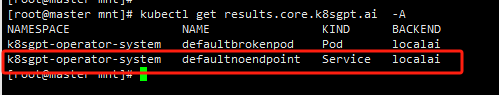

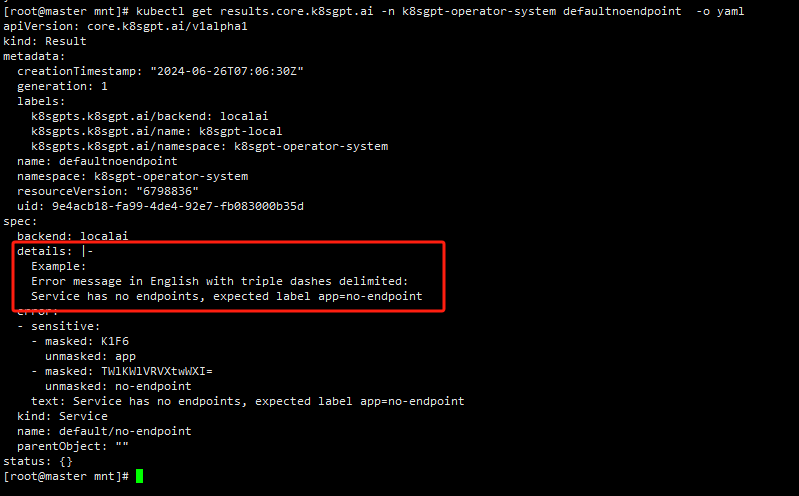

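Create a Service with no endpoints

    kubectl create svc clusterip no-endpoint --tcp=80:80

View the result

Note:

The AI's answers are not consistent and are sometimes nonsensical; quality depends mainly on the model and on the machine's resources. If an answer is vague, delete the results, wait for them to be recreated, and then check the details again.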
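The delete-and-wait step can be scripted. The sketch below deletes all Result objects in the operator namespace and polls until new ones appear; the 10-second interval and the 30-attempt limit are arbitrary assumptions, and the helper names are hypothetical.

```shell
NAMESPACE="k8sgpt-operator-system"

# Count the Result objects currently present
# (prints 0 when there are none or when the query fails).
count_results() {
  kubectl get results -n "$NAMESPACE" --no-headers 2>/dev/null | grep -c . || true
}

# Delete all results, then poll until the operator recreates them.
refresh_results() {
  kubectl delete results --all -n "$NAMESPACE" || return 1
  tries=0
  while [ "$tries" -lt 30 ]; do
    if [ "$(count_results)" -gt 0 ]; then
      kubectl get results -n "$NAMESPACE"
      return 0
    fi
    tries=$((tries + 1))
    sleep 10
  done
  echo "no results recreated after 5 minutes" >&2
  return 1
}
```

After `refresh_results` returns, the details of each recreated result can be inspected again with `kubectl get results ... -o json`.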